23 How to Think about Research

This brings us to my third point, which is how to think about research articles. People tend to assume that newer is always better. Sometimes this is true: new phones are better than old phones, and new textbooks are often more up-to-date than old textbooks. But many students also believe that newer scholarly studies "replace" older ones. You see this assumption in headlines like "It's Official: European Scientific Journal Concludes…"

In general, that’s not how science works. In science, multiple conflicting studies come in over long periods of time, each one a drop in the bucket of the claim it supports. Over time, the weight of the evidence ends up on one side or another. Depending on the quality of the new research, some drops are bigger than others (some much bigger), but overall it is an incremental process.

As such, studies that are consistent with previous research are often more trustworthy than those with surprising or unexpected results. This runs counter to the narrative promoted by the press: "news," after all, favors what is new and different. The unfortunate effect of the press's presentation of science (particularly science on popular topics such as health) is that it rarely conveys the slow accumulation of evidence for each side of an issue. Instead, the press often presents a world where last month's findings are "overturned" by this month's findings, which are in turn "overturned" back to the original finding a month later. This whiplash presentation ("Chocolate is good for you! Chocolate is bad for you!") undermines the public's faith in science. But the whiplash does not come from science: it is a product of how the press presents it.

As a fact-checker, your job is not to resolve debates based on new evidence, but to accurately summarize the state of research and the consensus of experts in a given area, taking into account majority and significant minority views.

For this reason, fact-checking communities such as Wikipedia discourage authors from over-citing individual studies, which tend to point in different directions. Instead, Wikipedia encourages users to find high-quality secondary sources that reliably summarize the research base of an area, such as reviews covering multiple studies. This is good advice for fact-checkers as well. Without an expert's background, it can be hard to place new research in the context of older work, and that placement is exactly what you want to achieve.

Here’s a claim (two claims, actually) that ran recently in the Washington Post:

The alcohol industry and some government agencies continue to promote the idea that moderate drinking provides some health benefits. But new research is beginning to call even that long-standing claim into question.

Reading down further, we find a more specific claim: the medical consensus is that alcohol is a carcinogen even at low levels of consumption. Is this true?

The first thing we do is look at the authorship of the article. It's from the Washington Post, which is a generally reliable publication, and one of its authors has made a career of data analysis (and even won a Pulitzer Prize as part of a team that analyzed data and uncovered election fraud in a Florida mayoral race). So one thing to consider is that these people may be better interpreters of the data than you are. (Key thing for fact-checkers to keep in mind: You are often not a person in a position to know.)

But suppose we want to dig further and find out if they are really looking at a shift in the expert consensus, or just adding more drops to the evidence bucket. How would we do that?

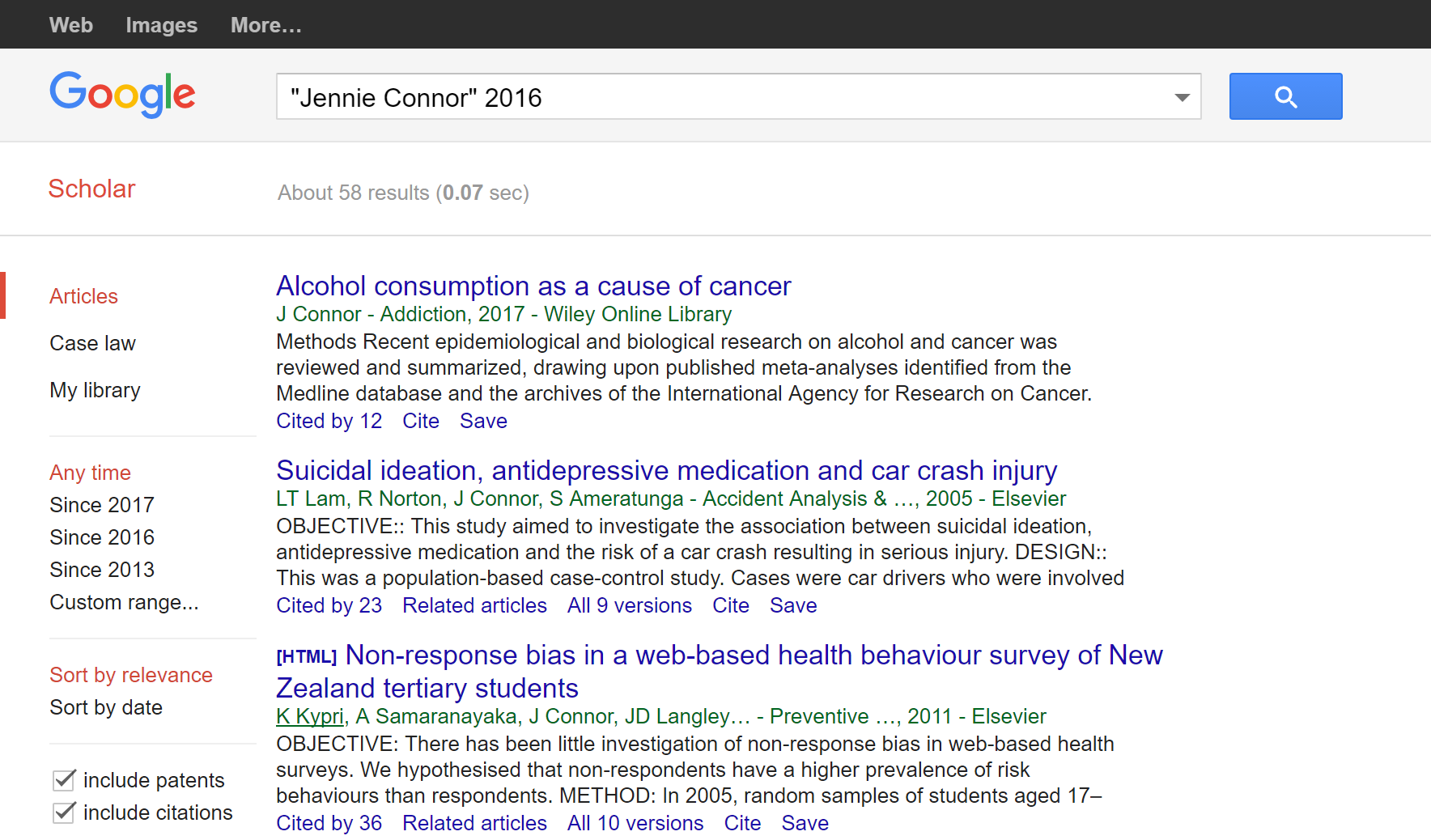

First, we’d sanity check where the pieces they mention were published. The Post article mentions two articles by “Jennie Connor, a professor at the University of Otago Dunedin School of Medicine,” one published last year and the other published earlier. Let’s find the more recent one, which seems to be a key input into this article. We go to Google Scholar and type in “‘Jennie Connor’ 2016”:

As usual, we’re scanning quickly to get to the article we want, but also minding our peripheral vision here. So, we see that the top one is what we probably want, but we also notice that Connor has other well-cited articles in the field of health.

What about this article on "Alcohol consumption as a cause of cancer"? It is listed as published in 2017, which is probably the print issue's publication date; the article itself was released online in 2016. Even so, it has already been cited by twelve other papers.

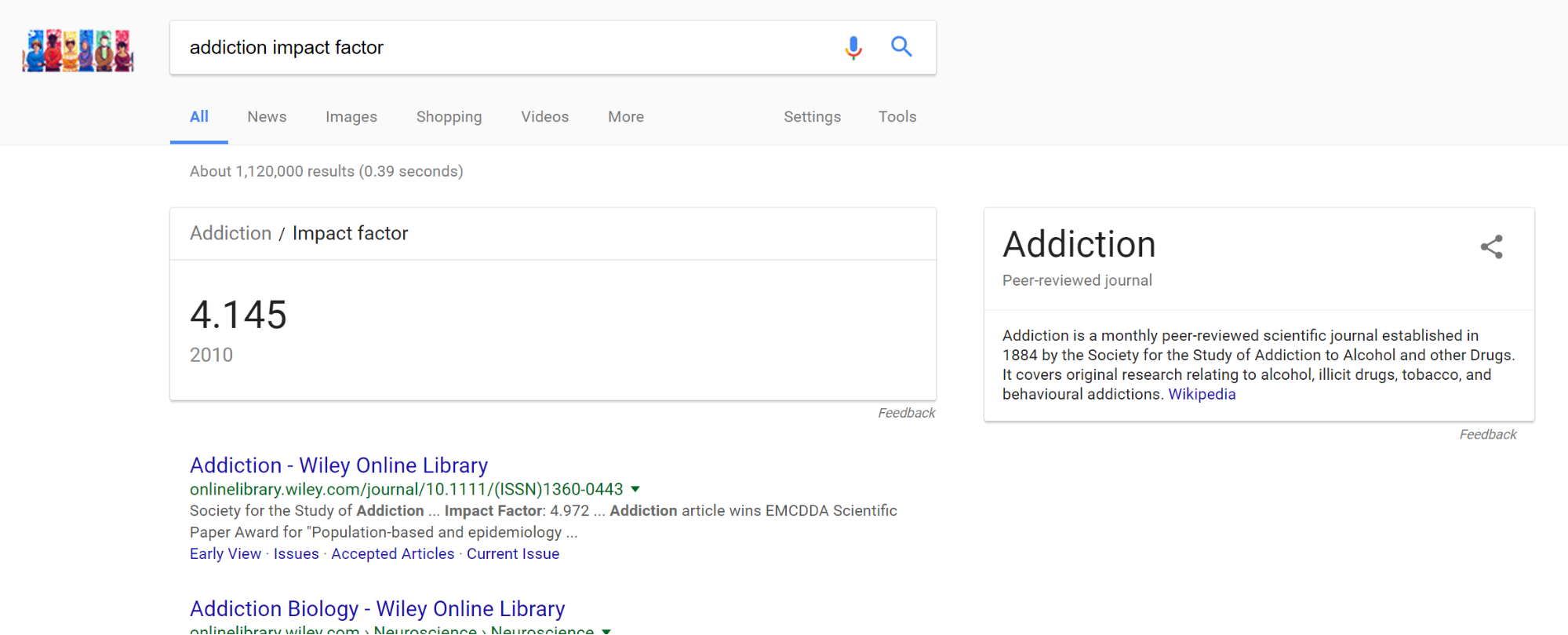

What about the journal it appeared in, Addiction? Is it reputable?

Let’s take a look with an impact factor search.

Yep, it looks legit. We also see in the knowledge panel to the right that the journal was founded in the 1880s. If we click through to the Wikipedia article linked from that panel, we learn that this journal ranks second in impact factor among journals on substance abuse.

Again, you should never use impact factor for fine-grained distinctions. What we’re checking for here is that the Washington Post wasn’t fooled into covering some research far out of the mainstream of substance abuse studies, or tricked into covering something published in a sketchy journal. It’s clear from this quick check that this is a researcher well within the mainstream of her profession, publishing in prominent journals.

Next we want to see what kind of article this is. Sometimes journals publish short reactions to other works, or smaller opinion pieces. What we’d like to see here is that this was either new research or a substantial review of research. We find from the abstract that it is primarily a review of research, including some of the newer studies. We note that it is a six-page article, and therefore not likely to be a simple letter or response to another article. The abstract also goes into detail about the breadth of evidence reviewed.

Frustratingly, we can’t get our hands on the article, but this probably tells us enough about it for our purposes.